The automotive industry is in the midst of a profound transformation, driven by the concurrent rise of electrification, autonomous driving technologies, and the shift toward Software-Defined Vehicles (SDVs). These forces are not only reshaping vehicle design and engineering but also redefining the role and requirements of in-cabin audio systems. As a result, automotive manufacturers are re-evaluating their audio architectures to meet new technical demands and consumer expectations.

Tesla, unencumbered by legacy architectures and entrenched supply chains, has emerged as a leader in embracing this new paradigm. Its software-centric approach has become a model for other original equipment manufacturers (OEMs) seeking to evolve toward more agile and integrated platforms. Many of the best practices discussed in this article have been implemented by Tesla for several years. As the industry moves in this direction, the automotive audio landscape is poised for dramatic change.

Electrification and Autonomy

The transition from internal combustion engines (ICE) to battery electric vehicles (BEVs) has introduced a new set of challenges and opportunities for in-vehicle audio. One of the most notable effects is the transformation of Noise, Vibration, and Harshness (NVH) dynamics. Because BEVs operate quietly, ambient and road-generated sounds are no longer masked by engine noise and become more perceptible to occupants. This heightened sensitivity is driving increased demand for active solutions such as Road Noise Cancellation (RNC). In addition, BEVs require Acoustic Vehicle Alerting Systems (AVAS) to ensure pedestrian safety, while continuing to support traditional audio functions including media playback, telephony, voice assistant integration, and system chimes.

While fully autonomous vehicles remain elusive, Level 2 and Level 3 Advanced Driver Assistance Systems (ADAS) are already becoming mainstream in new vehicles. These systems rely heavily on audio cues to communicate critical information to the driver. Directional chimes and context-aware alerts are essential components, requiring precise spatial audio processing and integration with vehicle sensors to provide timely and intuitive feedback. In these situations, audio serves a safety-critical role rather than an entertainment one.
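Directional chimes are often rendered by steering a mono alert toward the side of the cabin where the hazard is. A common way to do this between two speakers is a constant-power (sine/cosine) pan law, which keeps perceived loudness roughly constant as the sound moves. The sketch below assumes a simple left/right speaker pair; `pan_gains` and `render_chime` are hypothetical helpers, and production systems typically use more speakers and vehicle-specific tuning.

```python
import math

def pan_gains(position: float) -> tuple[float, float]:
    """Constant-power pan law for a two-speaker pair.

    position: -1.0 = fully left, 0.0 = center, +1.0 = fully right.
    Returns (left_gain, right_gain) with left**2 + right**2 == 1.
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be in [-1, 1]")
    # Map [-1, 1] onto an angle in [0, pi/2].
    theta = (position + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)

def render_chime(samples, position):
    """Split a mono chime into left/right channels for the given direction."""
    left_gain, right_gain = pan_gains(position)
    left = [s * left_gain for s in samples]
    right = [s * right_gain for s in samples]
    return left, right

# Example: a hazard on the right pushes the chime toward the right speaker.
left, right = render_chime([0.5, -0.5, 0.25], position=0.8)
```

The sine/cosine pair is chosen over linear crossfading because linear gains sum to a dip of about 3 dB at the center position.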

The Emergence of Software-Defined Vehicles

Perhaps the most transformative development in the automotive sector is the evolution toward SDVs. While software has long been used in automotive systems, it was traditionally distributed across numerous discrete Electronic Control Units (ECUs) developed by independent design teams and domain experts. The SDV model consolidates these functions into centralized compute platforms, often built around powerful system-on-chip (SoC) devices, which demands a fundamental re-architecture of how audio systems are developed, deployed, and maintained.

Companies that make this shift can realize significant cost savings. External DSPs have traditionally been used to implement audio features, with some premium vehicles requiring as many as four. These parts are expensive, and the processing they provide can be moved onto the central SoC. This transition requires an audio framework that allows siloed audio, voice, and AVAS teams to work together seamlessly. Amplifiers will no longer perform processing; they will simply be output devices. As OEMs assume greater control of the in-cabin experience, they are increasingly responsible for developing custom audio features and user interfaces, which calls for dedicated audio experience teams and long-term feature roadmap planning.

These trends are enabled by standard audio networks that connect devices. Automotive Audio Bus (A2B) from Analog Devices and Ethernet Audio Video Bridging (AVB) are two prominent in-vehicle networking technologies tailored for transmitting audio and data with low latency and high reliability, but they serve different roles and use cases. A2B is a low-cost, low-latency digital audio bus designed specifically for point-to-multipoint audio distribution. It allows microphones, speakers, and amplifiers to be connected in a daisy-chain topology with a single twisted-pair cable, which reduces wiring complexity and weight — key benefits for compact and cost-sensitive vehicles.

However, A2B has limited bandwidth and carries primarily audio data, making it less suitable for systems that require video or broader data networking. In contrast, Ethernet AVB is a standards-based extension of Ethernet that provides synchronized streaming of audio and video over a more scalable network. It supports higher bandwidth and integrates easily with other Ethernet-based systems, making it well suited to premium infotainment and ADAS applications. The trade-off is higher cost, complexity, and power consumption.
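A first-order way to compare the two networks for a given design is to check whether the raw audio payload fits the link. The sketch below computes uncompressed PCM bitrate as channels × sample rate × bits per sample and divides it by a link capacity passed in as a parameter. The capacity constants are assumptions for illustration (A2B is commonly quoted at about 50 Mbit/s, and 100 Mbit/s is a common automotive Ethernet rate); the calculation ignores framing, control, and protocol overhead, so real headroom is smaller.

```python
def pcm_bitrate_bps(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Raw (uncompressed) PCM bitrate, with no framing or protocol overhead."""
    return channels * sample_rate_hz * bits_per_sample

def link_utilization(channels, sample_rate_hz, bits_per_sample, link_capacity_bps):
    """Fraction of the link's nominal capacity consumed by the audio payload."""
    return pcm_bitrate_bps(channels, sample_rate_hz, bits_per_sample) / link_capacity_bps

# Assumed nominal capacities, for illustration only.
A2B_CAPACITY_BPS = 50_000_000
ETH_100M_CAPACITY_BPS = 100_000_000

# Example: 32 channels at 48 kHz / 24-bit.
payload = pcm_bitrate_bps(channels=32, sample_rate_hz=48_000, bits_per_sample=24)
print(payload)  # 36864000
print(round(link_utilization(32, 48_000, 24, A2B_CAPACITY_BPS), 3))  # 0.737
```

At roughly 74% of the assumed A2B capacity before overhead, such a configuration leaves little room for growth, which is the kind of result that pushes a design toward a higher-bandwidth network.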

Continuous Integration (CI) and Over-the-Air (OTA) updates are becoming standard, placing a premium on simulation, testing, and automated integration pipelines. Developers must now work across organizational and corporate boundaries within shared software frameworks, often collaborating directly with SoC vendors to ensure compatibility and performance. Furthermore, the traditional roles of Tier 1 hardware suppliers are shifting. With contract manufacturers (CMs) and original design manufacturers (ODMs) entering the space, many suppliers are transitioning toward software-centric business models. This new ecosystem opens the door for OEMs to monetize their software platforms through premium audio features and personalized content experiences.

The choice between developing a proprietary audio framework in-house and adopting an existing one is crucial for OEMs and Tier 1 suppliers. While a custom-built framework provides tailored solutions and a competitive edge, it demands substantial ongoing investment and a dedicated engineering team. Organizations must weigh the benefits of complete customization against the resources required for framework development, especially given the potential need for multi-SoC and multi-DSP support and third-party IP integration. Adopting a widely supported, industry-standard framework may be more pragmatic, allowing engineers to focus on crafting innovative audio experiences rather than building and maintaining the underlying infrastructure.

Audio Weaver: Enabling the Next Generation of In-Vehicle Audio

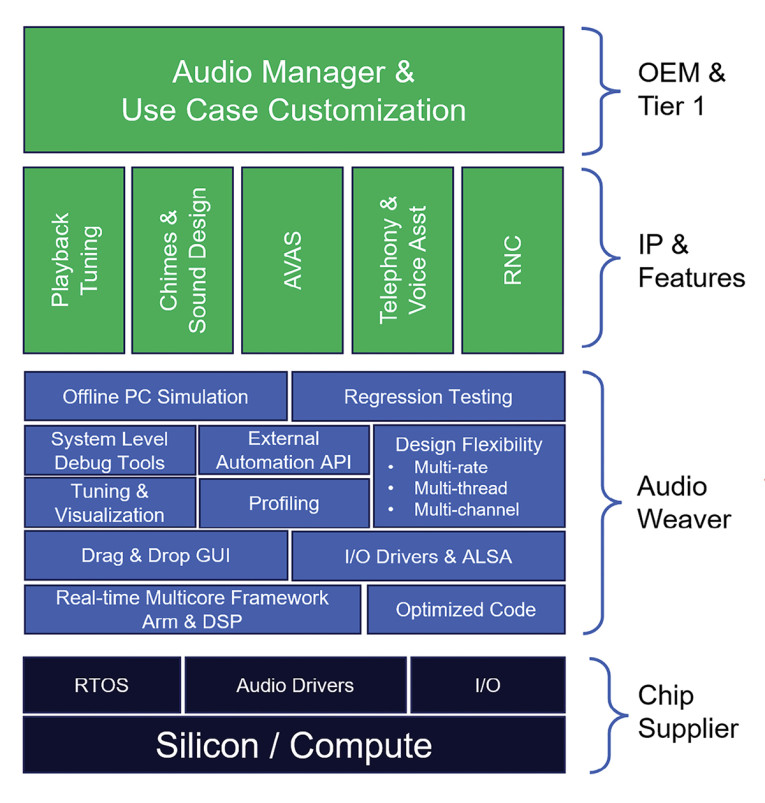

In the context of centralized compute platforms and modular audio development, Audio Weaver serves as a critical middleware solution for SDVs. Its modular architecture, combined with a large library of audio processing components, allows OEMs and Tier 1 suppliers to build adaptable audio systems that meet both functional and performance requirements. By decoupling audio development from hardware-specific constraints, this framework streamlines system integration and significantly reduces time-to-market.

Industry adoption has grown, with Audio Weaver now supported by major SoC vendors, Tier 1s, and third-party IP providers. The platform’s pluggable API architecture supports integration of custom features from OEMs or partners, fostering interoperability and reuse across programs (Figure 1).

At the core of the framework is a deterministic, real-time scheduler capable of distributing audio tasks across multiple cores. The platform is optimized for both DSP and ARM architectures, with instruction-set-specific enhancements to ensure efficient execution. Integration work is often done in close collaboration with SoC providers to ensure low-level compatibility and performance.
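To make the idea of distributing audio work across cores concrete, the sketch below assigns modules with known loads (in MHz) to cores using a greedy longest-processing-time heuristic and rejects any assignment that would exceed a per-core budget. This is a hypothetical illustration of static load balancing, not Audio Weaver's actual scheduler; the module names, loads, and budgets are invented.

```python
import heapq

def assign_to_cores(module_loads_mhz: dict[str, float], num_cores: int,
                    core_budget_mhz: float) -> list[list[str]]:
    """Greedy longest-processing-time (LPT) assignment of modules to cores.

    Modules are placed, heaviest first, on the currently least-loaded core.
    Raises ValueError if a module would push that core past its MHz budget
    (if the least-loaded core cannot take it, no core can).
    """
    # Min-heap of (current load in MHz, core index).
    heap = [(0.0, core) for core in range(num_cores)]
    heapq.heapify(heap)
    assignment = [[] for _ in range(num_cores)]
    for name, load in sorted(module_loads_mhz.items(), key=lambda kv: kv[1], reverse=True):
        current, core = heapq.heappop(heap)
        if current + load > core_budget_mhz:
            raise ValueError(f"module {name!r} does not fit on any core")
        assignment[core].append(name)
        heapq.heappush(heap, (current + load, core))
    return assignment

# Hypothetical per-module loads in MHz.
loads = {"rnc": 300, "echo_cancel": 250, "beamformer": 200, "playback_eq": 150, "avas": 50}
cores = assign_to_cores(loads, num_cores=2, core_budget_mhz=500)
```

With these numbers, core 0 receives `rnc`, `playback_eq`, and `avas` (500 MHz) and core 1 receives `echo_cancel` and `beamformer` (450 MHz). A real deterministic scheduler must also account for data dependencies and block timing between cores, which this sketch ignores.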

The module library — comprising more than 600 building blocks — covers a wide range of functionality. Fundamental operations such as mixing, filtering, and delay processing are complemented by advanced modules for beamforming, echo cancellation, and sound synthesis. These are used to support playback, AVAS, voice communication, and context-aware alerting.

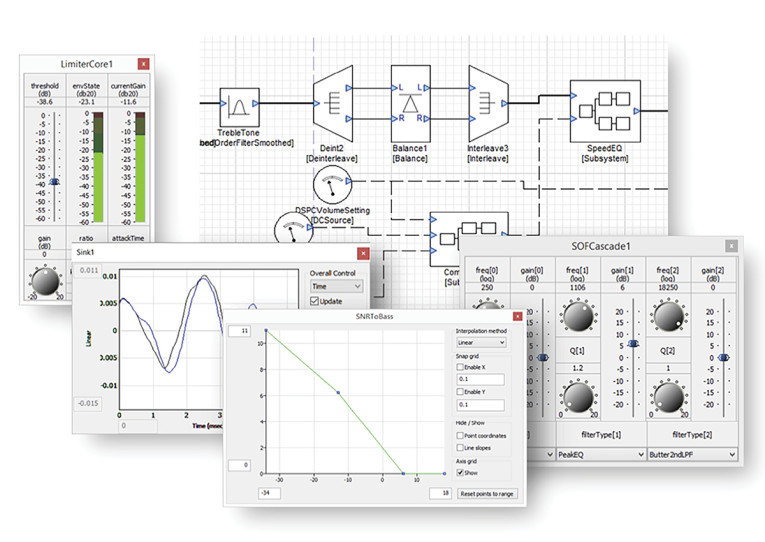

System design is performed using a graphical editor, where modules can be combined to form signal chains and feature blocks (Figure 2). The framework supports multirate processing, variable block sizes, and multichannel audio paths. Built-in “inspectors” allow developers to monitor real-time signal values and adjust tuning parameters dynamically during development.
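Signal chains built in a graphical editor map naturally onto a pipeline of stateful modules, each processing one block of samples per call. The sketch below is a hypothetical Python analogue, not Audio Weaver code: `Gain`, `Delay`, `Chain`, and `run` are invented names. Because each module keeps its own state between calls, the output is the same regardless of the block size used to feed the chain.

```python
class Gain:
    """Scales every sample by a fixed linear gain."""
    def __init__(self, gain: float):
        self.gain = gain

    def process(self, block: list[float]) -> list[float]:
        return [x * self.gain for x in block]

class Delay:
    """Integer-sample delay that keeps its history between blocks."""
    def __init__(self, samples: int):
        self.buffer = [0.0] * samples

    def process(self, block: list[float]) -> list[float]:
        # Prepend stored history, emit the oldest samples, keep the newest.
        combined = self.buffer + list(block)
        out = combined[:len(block)]
        self.buffer = combined[len(block):]
        return out

class Chain:
    """Runs modules in series, feeding each module's output to the next."""
    def __init__(self, *modules):
        self.modules = modules

    def process(self, block):
        for module in self.modules:
            block = module.process(block)
        return block

def run(chain, signal, block_size):
    """Feed a signal through a chain in fixed-size blocks and collect the output."""
    out = []
    for start in range(0, len(signal), block_size):
        out.extend(chain.process(signal[start:start + block_size]))
    return out

signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
out_4 = run(Chain(Gain(0.5), Delay(2)), signal, block_size=4)
out_3 = run(Chain(Gain(0.5), Delay(2)), signal, block_size=3)
```

Both runs produce `[0.0, 0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]`. A fresh chain is built for each run because the modules carry state.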

Profiling capabilities are integral to the platform and are used to validate real-time performance. Detailed resource metrics — including per-module megahertz and memory consumption — are gathered directly from the target hardware. Long-duration profiling tracks both average and peak system load to ensure robustness under dynamic conditions.
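Per-module MHz figures follow directly from cycle counts: a module that consumes C cycles per block, running at B blocks per second (sample rate divided by block size), needs C·B cycles per second, or C·B/10⁶ MHz. Dividing by the core clock gives its share of the processor. The sketch below applies this to a few hypothetical per-block cycle measurements and reports average and peak load; the cycle counts, block size, and 1 GHz clock are invented for illustration.

```python
def block_rate_hz(sample_rate_hz: float, block_size: int) -> float:
    """Number of audio blocks processed per second."""
    return sample_rate_hz / block_size

def load_summary(cycles_per_block, sample_rate_hz, block_size, clock_hz):
    """Return (average_mhz, peak_mhz, average_pct, peak_pct) over measured blocks."""
    rate = block_rate_hz(sample_rate_hz, block_size)
    mhz = [c * rate / 1e6 for c in cycles_per_block]
    avg_mhz = sum(mhz) / len(mhz)
    peak_mhz = max(mhz)
    clock_mhz = clock_hz / 1e6
    return avg_mhz, peak_mhz, 100 * avg_mhz / clock_mhz, 100 * peak_mhz / clock_mhz

# Hypothetical measurements: 48 kHz, 32-sample blocks (1500 blocks/s), 1 GHz core.
avg_mhz, peak_mhz, avg_pct, peak_pct = load_summary(
    [100_000, 120_000, 200_000], sample_rate_hz=48_000, block_size=32, clock_hz=1e9)
```

Here the average load is 210 MHz (21%) but the peak is 300 MHz (30%). The peak is what must fit within the real-time deadline, which is why long-duration profiling tracks both.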

For development and validation, a Native PC Target mode supports real-time audio processing on standard Windows machines using DirectX or ASIO. This simulation environment supports rapid prototyping, module integration, A/B testing, and regression validation — independent of embedded target hardware. In practice, most feature development is completed in this mode, with embedded deployment used for final integration and peripheral validation.

Automation is supported via a scripting API that enables regression testing, continuous integration workflows, and external tuning tool integration. The same interface is available in both PC simulation and target-connected modes, supporting a unified development process.
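A typical automated regression check renders a test signal through the current build and compares the result against a stored "golden" output. The sketch below shows only that comparison step in plain Python; how the output is captured from the PC simulation or the target is left out, and the function names and default thresholds are assumptions rather than part of any Audio Weaver API.

```python
import math

def max_abs_error(output, reference):
    """Largest per-sample difference between output and reference."""
    if len(output) != len(reference):
        raise ValueError("output and reference lengths differ")
    return max(abs(o - r) for o, r in zip(output, reference))

def snr_db(output, reference):
    """Ratio of reference power to error power, in dB (inf if identical)."""
    if len(output) != len(reference):
        raise ValueError("output and reference lengths differ")
    signal_power = sum(r * r for r in reference)
    error_power = sum((o - r) ** 2 for o, r in zip(output, reference))
    if error_power == 0:
        return math.inf
    if signal_power == 0:
        return -math.inf
    return 10 * math.log10(signal_power / error_power)

def passes_regression(output, reference, max_err=1e-4, min_snr_db=90.0):
    """Pass only if both the worst-case sample error and the SNR meet their limits."""
    return (max_abs_error(output, reference) <= max_err
            and snr_db(output, reference) >= min_snr_db)
```

Checking both metrics catches two different failure modes: a single glitch shows up in the maximum error, while a small but pervasive change (for example, a slightly altered filter coefficient) shows up in the SNR.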

For extending system functionality, developers can use the Custom Module API to introduce proprietary processing. Modules are created using MATLAB and C, and a built-in wizard streamlines the process of wrapping new algorithms within the framework.
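Custom modules in the framework are written in MATLAB and C. As a language-neutral illustration of the general shape such a module tends to take (parameters set at construction, persistent state, a reset, and a per-block process function), the sketch below implements a single biquad section in Python. `BiquadModule` is a hypothetical name, it is not the Custom Module API, and the coefficients are supplied by the caller already normalized so that a0 = 1.

```python
class BiquadModule:
    """Hypothetical custom module: one second-order IIR section (Direct Form I).

    y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]
    Coefficients are assumed normalized so that a0 == 1.
    """
    def __init__(self, b0, b1, b2, a1, a2):
        self.b = (b0, b1, b2)
        self.a = (a1, a2)
        self.reset()

    def reset(self):
        """Clear the filter history, e.g. when the signal path restarts."""
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process(self, block):
        """Filter one block; state carries over to the next call."""
        b0, b1, b2 = self.b
        a1, a2 = self.a
        out = []
        for x in block:
            y = b0 * x + b1 * self.x1 + b2 * self.x2 - a1 * self.y1 - a2 * self.y2
            self.x2, self.x1 = self.x1, x
            self.y2, self.y1 = self.y1, y
            out.append(y)
        return out

# A one-pole recursion y[n] = x[n] + 0.5*y[n-1], expressed as a biquad.
decay = BiquadModule(1.0, 0.0, 0.0, -0.5, 0.0)
impulse_response = decay.process([1.0, 0.0, 0.0])
```

Separating `reset` from construction lets the host clear state without re-entering parameters, a common pattern in block-based audio modules.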

Machine learning (ML) capabilities have recently been added, with runtime support for ONNX and TensorFlow Lite Micro (TFLM). These inference modules enable real-time deployment of ML-based audio features using standard model development workflows. Developers can leverage the same tuning and profiling infrastructure to optimize and validate machine learning features alongside traditional audio processing.

Finally, Audio Weaver is part of a growing ecosystem of pre-integrated third-party IP. Partnerships with organizations such as Fraunhofer, Dirac, Arkamys, Bose, Impulse Audio Labs, AIZip, and Intelligo, among others, allow developers to evaluate and deploy advanced audio technologies with minimal integration effort. By providing a unified execution environment, the platform ensures that all components, from core audio processing to premium IP, interoperate reliably within complex automotive architectures.

Audio Weaver on Snapdragon (AWE-Q)

One of the most notable recent implementations of Audio Weaver is its integration with the Qualcomm Snapdragon SA8255 platform, branded as "AWE-Q." Designed specifically for high-performance automotive applications, AWE-Q represents a major leap forward in audio processing capability (Figure 3). It supports multicore processing across three Hexagon DSPs and multiple ARM cores, enabling efficient distribution of tasks to meet the demands of increasingly complex audio pipelines. This work is also being applied to the next-generation SA8397 and SA8797 SoCs.

AWE-Q allows configuration of the underlying hardware through a text configuration file as well as through the Designer GUI. TDM ports, ALSA devices, memory sizes, and processor clocks can all be easily configured. A new Event module allows asynchronous notifications to be sent from the DSP to the high-level OS. AWE-Q also provides full access to QXDM logging capabilities. Photo 1 shows the Qualcomm Snapdragon Ride SX 4.0 Automotive Development Platform. The Ride SX 4.0 allows for rapid creation of customizable automotive cockpit solutions for the SA8775P or SA8255P SoCs. The platform is designed to enable enhanced testing and debugging of the chipsets.
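Hardware settings such as TDM port parameters must be mutually consistent, whichever way they are entered. A TDM port's bit clock equals sample rate × slots per frame × bits per slot, so an 8-slot, 32-bit port at 48 kHz runs at 12.288 MHz. The sketch below checks that relationship for a hypothetical port description. The dictionary keys, the set of allowed slot widths, and the bit-clock limit are assumptions for illustration and do not reflect the AWE-Q configuration file format.

```python
def tdm_bit_clock_hz(sample_rate_hz: int, slots: int, slot_width_bits: int) -> int:
    """Bit clock for a TDM frame: one frame per sample period, slots * width bits each."""
    return sample_rate_hz * slots * slot_width_bits

def check_tdm_port(port: dict, max_bclk_hz: int) -> int:
    """Validate a hypothetical TDM port description and return its bit clock.

    Expected keys (assumed): sample_rate_hz, slots, slot_width_bits, active_channels.
    """
    if port["active_channels"] > port["slots"]:
        raise ValueError("more active channels than TDM slots")
    if port["slot_width_bits"] not in (16, 24, 32):  # assumed supported widths
        raise ValueError("unsupported slot width")
    bclk = tdm_bit_clock_hz(port["sample_rate_hz"], port["slots"], port["slot_width_bits"])
    if bclk > max_bclk_hz:
        raise ValueError(f"bit clock {bclk} Hz exceeds limit {max_bclk_hz} Hz")
    return bclk

# A hypothetical 8-slot, 32-bit TDM port at 48 kHz carrying 6 channels.
port = {"sample_rate_hz": 48_000, "slots": 8, "slot_width_bits": 32, "active_channels": 6}
print(check_tdm_port(port, max_bclk_hz=25_000_000))  # 12288000
```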

Changes Coming to the Supply Chain

We are at the forefront of a major shift in audio technology — transitioning from traditional DSP and rule-based algorithms to AI-driven solutions powered by data and training. This evolution began with voice processing, where AI-based noise reduction has significantly improved telephony and communication systems. Today, many playback algorithms still rely on hand-tuned heuristics developed over years of testing, but they are approaching the ceiling of their performance potential.
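Speed-dependent volume compensation is a familiar example of the kind of hand-tuned heuristic described here: playback gain is raised as road and wind noise increase with vehicle speed, following a breakpoint table that a tuning engineer adjusts by ear. The sketch below interpolates such a table linearly; the breakpoints are invented values, not tuning data from any vehicle.

```python
import bisect

# Hypothetical tuning table: (vehicle speed in km/h, extra gain in dB).
SPEED_GAIN_TABLE = [(0.0, 0.0), (40.0, 2.0), (80.0, 5.0), (130.0, 8.0)]

def speed_gain_db(speed_kmh: float, table=SPEED_GAIN_TABLE) -> float:
    """Linearly interpolate the gain table, holding the end values outside its range."""
    speeds = [s for s, _ in table]
    if speed_kmh <= speeds[0]:
        return table[0][1]
    if speed_kmh >= speeds[-1]:
        return table[-1][1]
    i = bisect.bisect_right(speeds, speed_kmh)
    (s0, g0), (s1, g1) = table[i - 1], table[i]
    return g0 + (g1 - g0) * (speed_kmh - s0) / (s1 - s0)

def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude factor."""
    return 10 ** (gain_db / 20)

# At 60 km/h the table yields 3.5 dB of extra gain.
gain_60 = speed_gain_db(60.0)
```

Every breakpoint in a table like this encodes a tuning judgment, which is part of why such approaches are hard to improve further by hand.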

The next wave of innovation will be driven by applying AI to playback, unlocking new levels of audio quality and personalization. Emerging solutions from multiple vendors include AI-powered volume management, intelligent upmixing, speed-adaptive EQ, and dynamic equalization. There’s even growing interest in AI-based voice removal for karaoke and other creative applications. This is just the beginning of a smarter, more adaptive era in audio technology.

We’re witnessing the emergence of a new category of company in this evolving audio landscape. As future in-vehicle audio experiences become increasingly software-defined, OEMs are seeking a single entity to take ownership of the end-to-end audio experience. This includes curating best-in-class IP, integrating it into the broader audio software stack, fine-tuning key features, and shaping the overall sonic signature of the vehicle. We refer to this new class of partner as an “Audio Software Tier 1” — a company that assumes the role and responsibilities traditionally held by hardware-focused Tier 1 suppliers. In this new model, OEMs will source commodity components such as loudspeakers, amplifiers, and microphones, connect them via standardized audio networks, and rely on the Audio Software Tier 1 to bring it all together into a cohesive, high-quality experience.

OEMs will also need to reorganize as part of this transition. Instead of having siloed design teams for playback, voice, and safety features, OEMs will need to create a centralized audio team that collaborates to craft the overall customer experience. These teams will include audio engineers, software and hardware engineers, and UX/UI designers. They will manage processor resources, allocate features across cores, and plan updates and new feature releases.

Shaping the Future of Automotive Audio

As the industry transitions toward software-centric architectures, tools such as Audio Weaver will play a central role in enabling innovation and differentiation. Its ability to bridge low-level hardware capabilities with high-level audio features ensures that automotive OEMs can keep pace with the rapid evolution of consumer expectations and technology standards. With its scalable, future-proof design, Audio Weaver is not only streamlining development today but also laying the foundation for the next generation of intelligent, immersive in-vehicle audio experiences.

This article was originally published in audioXpress, June 2025

About the Authors

Paul Beckmann, a world-renowned audio DSP expert and the inventor of Audio Weaver, is the Co-Founder and Chief Technology Officer of DSP Concepts. Drawing on many years of experience developing audio products and creating algorithms for audio playback and voice, Paul is passionate about teaching and has taught industry courses on DSP, audio processing, and product development. Prior to founding DSP Concepts, Paul spent 9 years at Bose Corp., where he was involved in R&D and product development.

John Whitecar is the VP of Product Management, Automotive at DSP Concepts, where he works with world-class automakers to design cutting-edge automotive audio experiences. John's experience in automotive infotainment architecture, system design, vehicle acoustics, digital receivers, and digital signal processing spans more than 40 years and has shaped the audio experience in millions of products. Before joining DSP Concepts, John was Senior Manager Wireless Engineering, Principal Engineer at Tesla.